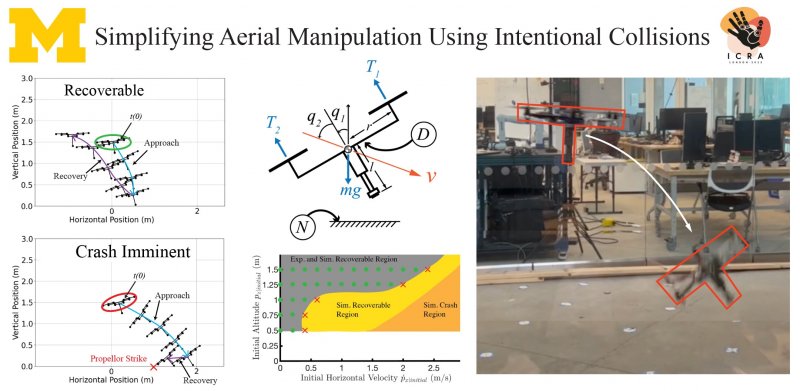

Simplifying Aerial Manipulation Using Intentional Collisions

Aerial manipulation refers to tasks that involve physical interaction between an unmanned aircraft system (UAS) and its environment. We aim to apply aerial manipulation to sample leaves and small branches from rainforest trees. Current approaches to aerial manipulation involve extended periods of UAS-environment interaction, during which forces and moments can cause underactuated multicopters to lose attitude or position control. By adapting the intelligent foot placement strategies found in dynamically stable hopping robots, this work proposes a strategy of carefully managed intentional collisions between the UAS and its environment. We designed an attitude controller, called the Velocity Matching controller, that aligns a UAS-mounted pogo-stick foot with the center-of-mass velocity vector during the approach to collision, to maximize the UAS's ability to recover a hover state after the collision. We propose using a flight envelope defined over altitude and horizontal speed to assess recoverability before initiating each approach to collision. We identify this envelope in a simulation study built on models of flight in the Conventional Waypoint Following and Velocity Matching control modes, together with a model of the collision response. Experimental flight testing evaluates the simulation-based envelope, yielding an actual envelope that is somewhat smaller than, but similar in shape to, the envelope identified in simulation. (by: Mark Nail)
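The two ideas in this abstract, aligning the foot with the velocity vector and checking an altitude/speed envelope before each approach, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' controller: it uses a planar x-z model, assumes the foot is mounted along the body's downward axis, and introduces a hypothetical tilt limit (`max_tilt`). The envelope is modeled as a polygon in (horizontal speed, altitude) space tested with standard ray casting; the vertices in `EXAMPLE_ENVELOPE` are placeholders, not values from the study.

```python
import math
from typing import List, Tuple

# Hypothetical placeholder envelope in (horizontal speed [m/s], altitude [m]).
# Illustrative only; these vertices are NOT values from the study.
EXAMPLE_ENVELOPE: List[Tuple[float, float]] = [
    (0.0, 1.0), (1.5, 2.0), (1.5, 6.0), (0.0, 6.0),
]


def foot_tilt_for_velocity_matching(vx: float, vz: float,
                                    max_tilt: float = math.radians(30.0)) -> float:
    """Foot angle [rad] from straight down, positive toward +x.

    Planar x-z model with z positive up (assumption). An untilted foot points
    along (0, -1); tilting it by theta gives (sin(theta), -cos(theta)).
    Matching this to the unit velocity vector gives theta = atan2(vx, -vz).
    The result is clamped to a hypothetical tilt limit.
    """
    if math.hypot(vx, vz) < 1e-6:
        return 0.0  # no meaningful velocity direction: keep the foot vertical
    theta = math.atan2(vx, -vz)
    return max(-max_tilt, min(theta, max_tilt))


def inside_envelope(speed: float, altitude: float,
                    polygon: List[Tuple[float, float]]) -> bool:
    """Ray-casting point-in-polygon test over (speed, altitude) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the query altitude can cross the ray.
        if (y1 > altitude) != (y2 > altitude):
            # Speed at which this edge crosses the query altitude.
            x_cross = x1 + (altitude - y1) * (x2 - x1) / (y2 - y1)
            if speed < x_cross:
                inside = not inside
    return inside
```

In this sketch a planner would call `inside_envelope` with the current horizontal speed and altitude before committing to an approach, and only then feed `foot_tilt_for_velocity_matching` into the attitude setpoint. The polygon representation is one convenient choice; an envelope identified from simulation data could be stored the same way.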

Communication is a Two-Way Street: Negotiating Driving Intent through a Shape-Changing Steering Wheel

In the information age, our machines have evolved from tools that perform mechanical work into computerized devices that process information. A collateral outcome of this trend is a diminishing role for haptic feedback. If the benefits of haptic feedback, including those inherent in tool use, are to be preserved in information-processing machines, we need a better understanding of the various ways in which haptic feedback supports embodied cognition and high-utility information exchange. In this paper we classify manual control interfaces as instrumental or semiotic and describe an exploratory study in which a steering wheel simultaneously communicates tactical and operational features in semi-autonomous driving. A shape-changing interface (semiotic/tactical) in the grip axis complements haptic shared control (instrumental/operational) in the steering axis. Experimental results involving N=30 participants show that adding a semiotic interface improves human-automation team performance in a shared driving scenario with competing objectives and metered information sharing.
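The abstract does not state the authors' steering-axis control law. A common way to model haptic shared control is a spring-damper torque that pulls the wheel toward the automation's preferred angle while the driver remains free to override it. The sketch below uses that generic form to illustrate the instrumental/operational channel only; the function name and the gain values (`stiffness`, `damping`, `max_torque`) are hypothetical, and the semiotic/tactical grip-axis channel is not modeled.

```python
def shared_control_torque(wheel_angle: float, wheel_rate: float,
                          auto_angle: float, stiffness: float = 2.0,
                          damping: float = 0.1,
                          max_torque: float = 3.0) -> float:
    """Guidance torque [N*m] on the steering wheel (hypothetical gains).

    Generic spring-damper law: the spring term pulls the wheel toward the
    automation's desired angle (auto_angle, rad) and the damping term resists
    wheel motion (wheel_rate, rad/s). The output is saturated at max_torque
    so the guidance stays bounded and can be overridden by the driver.
    """
    torque = stiffness * (auto_angle - wheel_angle) - damping * wheel_rate
    return max(-max_torque, min(torque, max_torque))
```

With the default gains, a wheel held at 0 rad while the automation prefers 0.5 rad receives 1.0 N*m of guidance toward 0.5 rad; larger disagreements are capped at 3.0 N*m.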